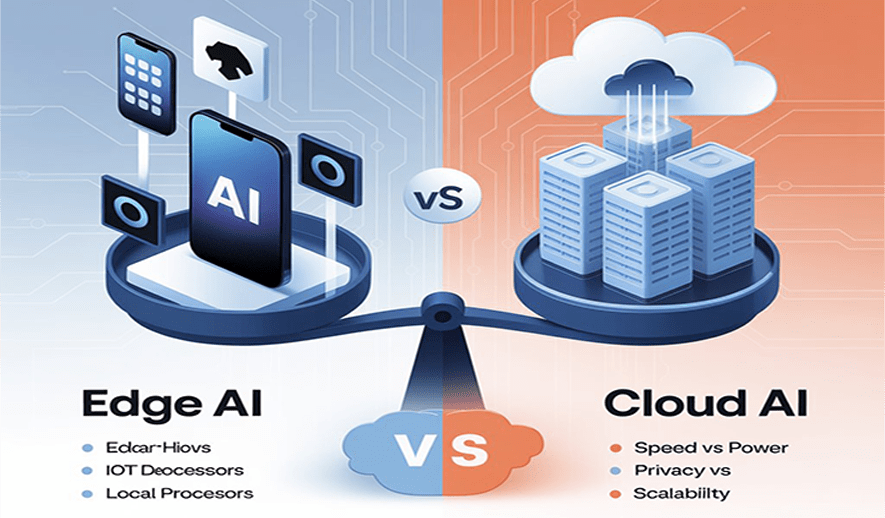

Edge AI vs Cloud AI: Trade-offs

Edge AI processes data locally on the device, while Cloud AI sends data to a central, remote server for processing. This fundamental difference creates trade-offs in performance (especially latency), scalability, security, and cost. The optimal choice depends on an application's specific requirements, and many modern systems use a hybrid approach to get the benefits of both.
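To make the latency side of this trade-off concrete, here is a minimal sketch that estimates end-to-end cloud latency as network round trip plus upload time plus server inference, and compares it with on-device inference against a deadline. The class and function names and every number are hypothetical assumptions. Preferring the cloud's larger model whenever it meets the deadline is only one possible policy, and cost, privacy, and loss of connectivity are not modeled.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """One inference request (hypothetical, illustrative fields)."""
    payload_bytes: int         # size of the input that would be uploaded
    latency_budget_ms: float   # deadline the application must meet


@dataclass
class Deployment:
    """Assumed performance figures for the two execution sites."""
    edge_inference_ms: float    # smaller on-device model
    cloud_inference_ms: float   # larger server-side model
    network_rtt_ms: float       # round trip to the data center
    uplink_mbps: float          # upload bandwidth in megabits per second


def cloud_latency_ms(w: Workload, d: Deployment) -> float:
    # 1 Mbps = 1,000 bits per millisecond, so bits / (Mbps * 1000) gives ms.
    transfer_ms = (w.payload_bytes * 8) / (d.uplink_mbps * 1000)
    return d.network_rtt_ms + transfer_ms + d.cloud_inference_ms


def choose_site(w: Workload, d: Deployment) -> str:
    """Prefer the cloud's larger model if it meets the deadline, else fall back to the edge."""
    if cloud_latency_ms(w, d) <= w.latency_budget_ms:
        return "cloud"
    if d.edge_inference_ms <= w.latency_budget_ms:
        return "edge"
    return "infeasible"
```

For example, with an assumed 8 ms edge model, 40 ms round trip, 20 Mbps uplink, and 15 ms cloud inference, a 200 KB camera frame needs 40 + 80 + 15 = 135 ms through the cloud, so a 10 ms braking deadline forces the edge, while a job with a one-minute budget can go to the cloud.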

Hybrid AI: Combining the best of both

Many real-world applications use a hybrid approach to gain the strengths of both Edge and Cloud AI. In this model:

- Edge devices handle immediate, latency-sensitive tasks like real-time fraud detection at a point-of-sale terminal.

- The cloud is used for heavy, non-time-critical processes, such as:

  - Training new, more powerful AI models with aggregated data from all edge devices.

  - Long-term storage and analysis of collective data to identify broad trends.

  - Deploying model updates back to the edge devices.
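The division of labor above can be sketched as a toy loop. This is a minimal illustration under loud assumptions: the `Model`, `EdgeDevice`, `Cloud`, and `sync` names are hypothetical, the "model" is just a threshold (for instance on transaction amounts in the point-of-sale case), and "retraining" only refits that threshold as the mean plus `k` standard deviations of the aggregated data. A real system would train an actual ML model and ship it through a deployment pipeline.

```python
import statistics
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Model:
    """Toy stand-in for a trained model: flags values above a threshold (assumption)."""
    version: int
    threshold: float

    def predict(self, value: float) -> bool:
        return value > self.threshold


@dataclass
class EdgeDevice:
    """Hypothetical edge node: decides locally, uploads later."""
    model: Model
    buffer: list[float] = field(default_factory=list)

    def handle(self, value: float) -> bool:
        # Latency-sensitive path: the decision uses only the local model, no network call.
        flagged = self.model.predict(value)
        # Keep the reading for a later, non-urgent upload to the cloud.
        self.buffer.append(value)
        return flagged

    def upload(self) -> list[float]:
        # Hand over the buffered readings and start a fresh buffer.
        batch, self.buffer = self.buffer, []
        return batch


class Cloud:
    """Hypothetical central service: stores aggregated data and retrains."""

    def __init__(self) -> None:
        self.dataset: list[float] = []
        self.version = 0

    def ingest(self, batch: list[float]) -> None:
        # Long-term storage of data aggregated from every device.
        self.dataset.extend(batch)

    def retrain(self, k: float = 3.0) -> Model:
        # "Training" is only refitting the threshold as mean + k * std (assumption).
        if not self.dataset:
            raise ValueError("no data collected yet")
        mean = statistics.fmean(self.dataset)
        spread = statistics.pstdev(self.dataset)
        self.version += 1
        return Model(version=self.version, threshold=mean + k * spread)


def sync(cloud: Cloud, devices: list[EdgeDevice]) -> None:
    """Periodic, non-time-critical cycle: collect data, retrain, deploy the update."""
    for device in devices:
        cloud.ingest(device.upload())
    new_model = cloud.retrain()
    for device in devices:
        device.model = new_model
```

`handle` never touches the network, so the urgent decision does not depend on connectivity, while `sync` can run on whatever schedule bandwidth and cost allow.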

Real-world examples

- Self-driving cars: Edge AI handles instant decisions like braking to avoid a collision. The cloud receives aggregated, anonymized data over time to retrain and improve the overall driving model.

- Healthcare monitoring: A wearable device uses Edge AI to monitor a patient's vital signs and send an immediate alert if it detects a critical change. The full, anonymized medical data is sent to the cloud for in-depth, long-term diagnostic analysis.
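The wearable example splits into an on-device check and a de-identified upload. The sketch below assumes hypothetical field names and alert limits (a heart rate outside 40 to 130 bpm, or SpO2 below 90%) chosen purely for illustration, not as clinical thresholds. Removing the patient ID strips only a direct identifier; timestamps and physiological patterns can still identify a person, so genuine anonymization of medical data requires more than this.

```python
from dataclasses import dataclass

# Illustrative limits only (assumption), not clinical guidance.
HEART_RATE_RANGE_BPM = (40, 130)
MIN_SPO2_PERCENT = 90


@dataclass
class Reading:
    patient_id: str      # direct identifier: should not leave the device
    timestamp_s: int
    heart_rate_bpm: int
    spo2_percent: int


def critical_alerts(r: Reading) -> list[str]:
    """Edge-side check that runs on every reading, with no network dependency."""
    alerts = []
    low, high = HEART_RATE_RANGE_BPM
    if not low <= r.heart_rate_bpm <= high:
        alerts.append(f"heart rate {r.heart_rate_bpm} bpm outside {low}-{high}")
    if r.spo2_percent < MIN_SPO2_PERCENT:
        alerts.append(f"SpO2 {r.spo2_percent}% below {MIN_SPO2_PERCENT}%")
    return alerts


def deidentify(readings: list[Reading]) -> list[dict]:
    """Drop the direct identifier before the batch is uploaded for long-term analysis."""
    return [
        {
            "timestamp_s": r.timestamp_s,
            "heart_rate_bpm": r.heart_rate_bpm,
            "spo2_percent": r.spo2_percent,
        }
        for r in readings
    ]
```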