Go Cloud-Native

Adopting a cloud-native approach with containers, APIs, and Kubernetes offers numerous benefits. It fundamentally changes how applications are built and operated, so that they can take full advantage of cloud characteristics such as on-demand provisioning and elastic capacity. Cloud-native apps are built as a collection of independent, loosely coupled services (microservices), which enables greater speed, agility, and scalability than traditional monolithic applications.

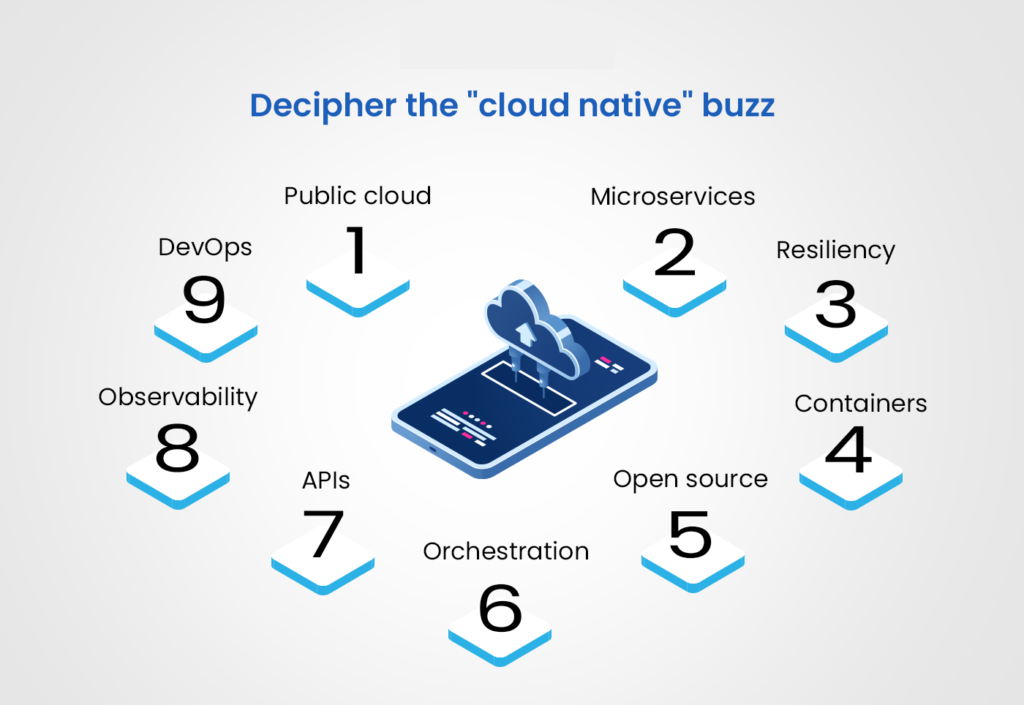

The key components of a cloud-native strategy

- Microservices: Instead of a single, large application (monolith), a cloud-native app consists of many small, independent services. Each microservice focuses on a specific business capability, allowing teams to develop and deploy updates for individual services without affecting the entire application.

- Containers: Containers package an application and all its dependencies—including the code, libraries, and runtime—into a single, isolated, and portable unit. This ensures the application runs consistently across different environments, from a developer's machine to production. Docker is a popular containerization tool.

- APIs: APIs (Application Programming Interfaces) let microservices communicate with each other over a network, commonly via REST over HTTP or gRPC. Because each service exposes only a well-defined contract, its internals can change without breaking the services that call it, which is critical for maintaining an agile and modular architecture.

- Orchestration (Kubernetes): As the number of microservices and containers grows, managing them by hand becomes impractical. Kubernetes is the de facto standard for container orchestration, automating the deployment, scaling, and management of containerized applications. It helps keep your applications resilient and highly available, and it schedules workloads onto nodes that have enough spare capacity to run them.
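The APIs bullet can be illustrated with a minimal sketch that uses only Python's standard library. It assumes a hypothetical "inventory" service exposing `GET /stock/<sku>` that returns JSON, and a caller (standing in for, say, an "orders" service) that reaches it over HTTP. The service name, route, and data are illustrative only; production services would typically add a web framework, service discovery, and retries instead of a hard-coded URL.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from urllib.request import urlopen

# Hypothetical data owned by the inventory service (kept in memory for the sketch).
STOCK = {"sku-123": 7, "sku-456": 0}


class InventoryHandler(BaseHTTPRequestHandler):
    """Handles GET /stock/<sku> and answers with a small JSON document."""

    def do_GET(self):
        prefix = "/stock/"
        sku = self.path[len(prefix):] if self.path.startswith(prefix) else None
        if sku in STOCK:
            self._send_json(200, {"sku": sku, "available": STOCK[sku]})
        else:
            self._send_json(404, {"error": "unknown sku"})

    def _send_json(self, status, payload):
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence the default per-request logging to stderr


def start_inventory_service(port=0):
    """Run the service on a background thread; port 0 lets the OS pick a free port."""
    server = ThreadingHTTPServer(("127.0.0.1", port), InventoryHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def fetch_stock(base_url, sku):
    """The calling side: one HTTP request, JSON decoded into a dict.

    urlopen raises urllib.error.HTTPError for non-2xx responses such as 404.
    """
    with urlopen(f"{base_url}/stock/{sku}", timeout=2) as resp:
        return json.load(resp)


if __name__ == "__main__":
    server = start_inventory_service()
    base_url = f"http://127.0.0.1:{server.server_address[1]}"
    print(fetch_stock(base_url, "sku-123"))  # {'sku': 'sku-123', 'available': 7}
    server.shutdown()
    server.server_close()
```

The caller depends only on the URL and the JSON shape, not on how the inventory service stores its data. That narrow contract is what lets the two services be developed and deployed independently.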

The benefits of going cloud-native

1. Faster innovation and time-to-market

- Agile development: Small, independent microservices allow teams to work in parallel and autonomously, accelerating development cycles.

- Frequent releases: With automated Continuous Integration and Continuous Delivery (CI/CD) pipelines, development teams can build, test, and deploy new features and updates rapidly and reliably.
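The core rule of a CI/CD pipeline, that a change reaches deployment only if every earlier stage passes, can be sketched in a few lines. This is a simplified model assuming each stage is a callable that returns `True` on success; the `Stage` type, `run_pipeline` function, and stage names are hypothetical. Real pipelines are declared in the configuration format of whichever CI/CD system you use.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Stage:
    name: str
    run: Callable[[], bool]  # hypothetical: returns True when the stage succeeds


def run_pipeline(stages: List[Stage]) -> Tuple[bool, List[str]]:
    """Run stages in order, stopping at the first failure.

    Returns (succeeded, attempted_stage_names) so the caller can see where
    the pipeline halted. A later stage such as "deploy" never runs if an
    earlier gate such as "test" fails.
    """
    attempted: List[str] = []
    for stage in stages:
        attempted.append(stage.name)
        if not stage.run():
            return False, attempted
    return True, attempted


if __name__ == "__main__":
    deployed = []

    def deploy() -> bool:
        deployed.append("v2")  # stands in for pushing a new release
        return True

    stages = [
        Stage("build", lambda: True),
        Stage("test", lambda: False),  # simulate a failing test suite
        Stage("deploy", deploy),
    ]
    print(run_pipeline(stages))  # (False, ['build', 'test'])
    print(deployed)              # []
```

Because the gate is automatic, teams can release frequently without a manual check before every deployment: a broken build or failing test simply stops the change from going out.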

2. Enhanced scalability and resource optimization

- Horizontal scaling: Each microservice can be scaled out (by adding instances) or in independently, based on its own demand, enabling more efficient use of resources. For example, a video streaming service can scale up only its transcoding microservice during peak upload times.

- Elasticity: Orchestration tools like Kubernetes can automatically scale an application's resources up or down in near real time as traffic changes, matching capacity to demand and optimizing costs.

- Cost-efficiency: The pay-as-you-go model, combined with the denser resource utilization that containers allow, can significantly lower operational costs, because you no longer pay for capacity that is provisioned only for peak load.
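Kubernetes' Horizontal Pod Autoscaler documents its core scaling rule as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, and it skips scaling when that ratio is within a tolerance of 1.0 (0.1 by default). The sketch below applies that rule to a single service and clamps the result to minimum and maximum replica counts. The function name and default bounds are assumptions, and the real controller also accounts for pod readiness, missing metrics, and stabilization windows, which are omitted here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Simplified form of the Horizontal Pod Autoscaler's replica calculation."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: don't scale
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds (hypothetical defaults of 1 and 10).
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # Hypothetical transcoding service with a 60% average CPU utilization target.
    print(desired_replicas(4, 90, 60))   # 6: load is 1.5x target, scale out
    print(desired_replicas(4, 20, 60))   # 2: load is 1/3 of target, scale in
    print(desired_replicas(4, 63, 60))   # 4: within tolerance, no change
    print(desired_replicas(8, 180, 60))  # 10: capped at max_replicas
```

Only the transcoding service's metric is fed in, so only that service scales; the rest of the application keeps its current replica count. Scaling back in when load drops is what prevents paying for idle peak capacity.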

3. Increased resilience and reliability

- Fault isolation: If one microservice fails, the failure is contained to that service instead of bringing down the entire application, provided its callers handle the failure gracefully. The orchestrator can then restart the failed service automatically, helping to maintain high availability.

- Automated self-healing: Kubernetes continuously monitors the health of containers and can automatically restart or replace failed instances.

- Immutable infrastructure: By treating infrastructure as disposable components that are replaced rather than modified, cloud-native deployments become more predictable and less prone to configuration errors.
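Self-healing and immutable infrastructure both rest on a reconciliation loop: compare the desired state with what is actually running, then act on the difference. The following is a simplified, hypothetical model of one pass of such a loop; the `Instance` type, `reconcile` function, and image tags are illustrative and not a Kubernetes API. Failed instances and instances running an outdated image are deleted and replaced rather than modified in place. In a real cluster the kubelet also restarts failed containers in place and Deployments roll out replacements gradually; this sketch models only the replace-to-match-desired-state step.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Instance:
    name: str      # assumed unique, like a Pod name
    image: str     # e.g. "web:v2"; hypothetical image tag
    healthy: bool  # result of a health check


def reconcile(desired_count: int, desired_image: str,
              observed: List[Instance]) -> Tuple[List[str], int]:
    """One pass of a simplified reconciliation loop.

    Returns (names_to_delete, number_to_create). Unhealthy instances and
    instances on an outdated image are deleted and replaced, never modified
    in place; surplus healthy instances are deleted too.
    """
    good = [i for i in observed if i.healthy and i.image == desired_image]
    keep = good[:desired_count]
    keep_names = {i.name for i in keep}
    to_delete = [i.name for i in observed if i.name not in keep_names]
    to_create = desired_count - len(keep)
    return to_delete, to_create


if __name__ == "__main__":
    observed = [
        Instance("web-a", "web:v2", healthy=True),
        Instance("web-b", "web:v2", healthy=False),  # crashed
        Instance("web-c", "web:v1", healthy=True),   # running an old image
    ]
    print(reconcile(3, "web:v2", observed))  # (['web-b', 'web-c'], 2)
```

Running this pass repeatedly, and applying its plan each time, converges the system back to the desired state whenever an instance crashes or a new image version is declared.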

4. Operational flexibility and portability

- Vendor neutrality: Containers create a portable deployment unit, allowing applications to run consistently across public cloud, private cloud, and on-premises environments. This reduces vendor lock-in.

- Automated management: Orchestration and CI/CD automate many manual tasks, such as provisioning and deployment, reducing operational overhead and human error.

- Remote accessibility: A cloud-native development and deployment environment is accessible from anywhere, supporting distributed and remote workforces.